Image: AMD

AMD announced the Ryzen 8040 series of laptop processors at the company’s AI-themed event, reframing what has been a conversation about CPU speed, power, and battery life into one that prioritizes AI.

In January, AMD launched the Ryzen 7000 family, of which the Ryzen 7040 included the first use of what AMD then called its XDNA architecture, powering Ryzen AI. (When rival Intel disclosed its Meteor Lake processor this past summer, Intel began referring to the AI accelerator as an NPU, and the name stuck.)

More than 50 laptop models already ship with Ryzen AI, executives said. Now, it’s on to AMD’s next NPU, Hawk Point, inside the Ryzen 8040. In AMD’s case, the XDNA NPU assists the Zen CPU, with the Radeon RDNA architecture of the GPU powering graphics. But all three logic components work harmoniously, contributing to the greater whole.

Just be aware: AMD executives referred to the Ryzen 8000 family as “architecturally aligned” to the previous Ryzen 7000 generation, meaning that the AI improvements are probably the most significant change.

“We view AI as the single most transformational technology of the last ten years,” said Dr. Lisa Su, AMD’s chief executive, in kicking off AMD’s “Advancing AI” presentation on Wednesday.

Now, the fight is being waged across several fronts. While Microsoft and Google may want AI to be computed in the cloud, all the heavyweight chip companies are making a case for it to be processed locally, on the PC. That means finding applications that can take advantage of the local AI processing capabilities. And that means partnering with software developers to code apps for specific processors. The upshot is that AMD and its rivals must provide software tools to enable those applications to talk to their chips.

Naturally, AMD, Intel, and Qualcomm want those apps to run most effectively on their own silicon, so the chip companies must compete on two separate tracks: Not only do they have to produce the most powerful AI silicon, they must also ensure app developers can code to their chips in the most efficient manner possible.

Silicon makers have tried to entice game developers to do the same for years. And though AI can seem impenetrable from the outside, you can tease out familiar concepts. Quantization, for example, can be seen as a form of data compression: it reduces the complexity of large language models that normally run on powerful server processors so that they can run on local processors like the Ryzen 8000 series. Those kinds of tools are critical for "local AI" to succeed.

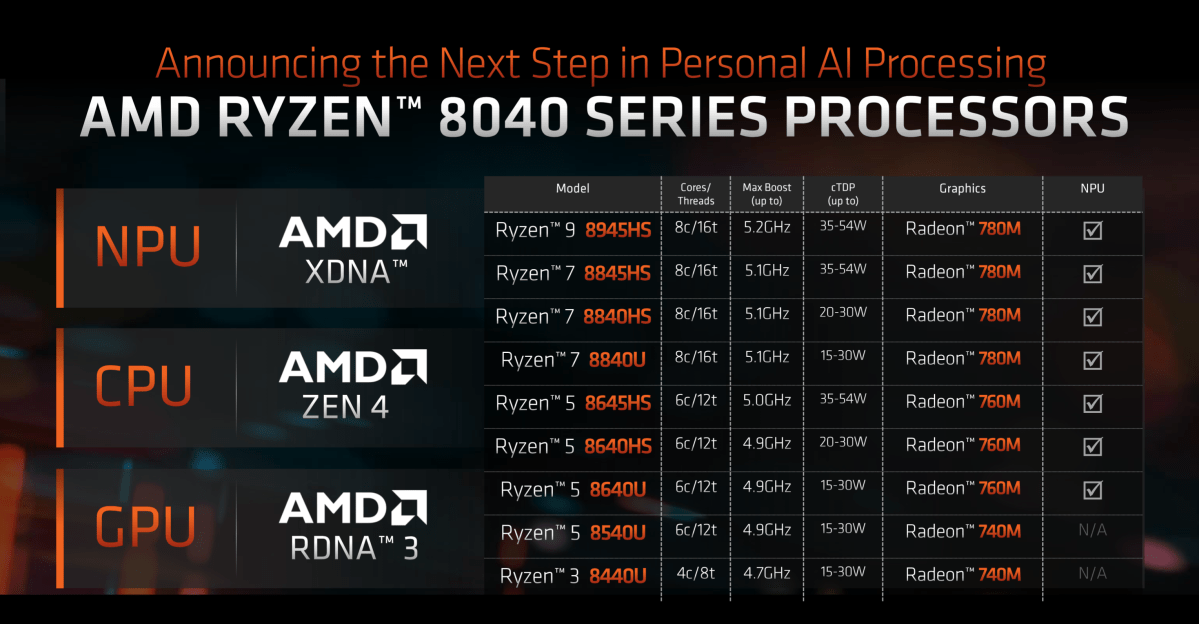

Meet the nine Ryzen 8040 mobile processors

As with many chips, AMD is announcing the Ryzen 8040 series now, but you'll see the chips in laptops beginning next year.

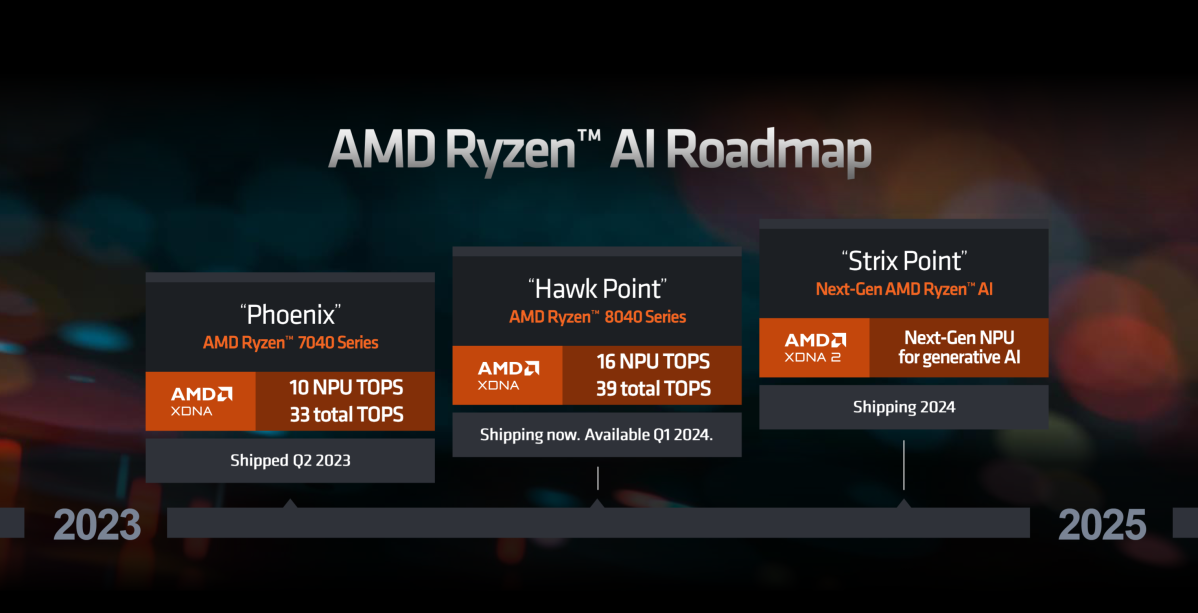

The Ryzen 8040 series combines AMD's Zen 4 CPU architecture, its RDNA 3 GPUs, and (still) the first-generation XDNA architecture. But the new chips actually use AMD's second NPU. The first, "Phoenix," delivered 10 trillion operations per second (TOPS) from the NPU and 33 TOPS in total, with the remainder coming from the CPU and the GPU. The 8040 series includes "Hawk Point," which raises the NPU to 16 TOPS, with 39 TOPS in total.
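A quick bit of arithmetic on AMD's stated figures shows where the gain comes from. The sketch below uses only the TOPS numbers quoted above; the dictionary names are illustrative. Subtracting the NPU's share from the total suggests the CPU and GPU contribute the same 23 TOPS in both generations, so the whole increase comes from the NPU.

```python
# TOPS figures as stated by AMD for its two NPU generations.
PHOENIX = {"npu": 10, "total": 33}
HAWK_POINT = {"npu": 16, "total": 39}


def cpu_gpu_share(chip: dict) -> int:
    """TOPS contributed by the CPU and GPU: the total minus the NPU's share."""
    return chip["total"] - chip["npu"]


npu_gain = HAWK_POINT["npu"] / PHOENIX["npu"]        # 16 / 10
total_gain = HAWK_POINT["total"] / PHOENIX["total"]  # 39 / 33

print(cpu_gpu_share(PHOENIX), cpu_gpu_share(HAWK_POINT))  # 23 23
print(f"NPU: {npu_gain:.2f}x, total: {total_gain:.2f}x")  # NPU: 1.60x, total: 1.18x
```

In other words, a 1.6X jump in NPU throughput translates into only about an 18 percent increase in total platform TOPS.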

Donny Woligroski, senior mobile processor technical marketing manager, told reporters that the CPU uses AVX-512 VNNI instructions to run lightweight AI functions on the CPU. AI can also run on the GPU, but at a high, inefficient power level—a stance we’ve heard from Intel, too.
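Why do VNNI instructions help with lightweight AI? The core AVX-512 VNNI instruction, VPDPBUSD, multiplies four unsigned 8-bit values by four signed 8-bit values and adds the sum to a 32-bit accumulator in a single step, work that previously took several instructions. That is exactly the inner loop of an 8-bit quantized neural network. The sketch below is a scalar emulation of one 32-bit lane, written for illustration only; it is not how AMD's software invokes the instruction.

```python
def to_int32(x: int) -> int:
    """Wrap an integer to the signed 32-bit range (VPDPBUSD does not saturate)."""
    x &= 0xFFFFFFFF
    return x - (1 << 32) if x >= (1 << 31) else x


def vpdpbusd_lane(acc: int, a_u8: list[int], b_s8: list[int]) -> int:
    """Emulate one 32-bit lane of VPDPBUSD: acc + sum of four u8 * s8 products."""
    if len(a_u8) != 4 or len(b_s8) != 4:
        raise ValueError("each lane takes exactly four bytes from each operand")
    if not all(0 <= a <= 255 for a in a_u8):
        raise ValueError("first operand must hold unsigned 8-bit values")
    if not all(-128 <= b <= 127 for b in b_s8):
        raise ValueError("second operand must hold signed 8-bit values")
    dot = sum(a * b for a, b in zip(a_u8, b_s8))
    return to_int32(acc + dot)


# Four quantized activations (unsigned) against four quantized weights (signed).
print(vpdpbusd_lane(10, [1, 2, 3, 4], [5, -6, 7, -8]))  # 10 + (5 - 12 + 21 - 32) = -8
```

The real instruction performs this operation on all 16 32-bit lanes of a 512-bit register at once.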

"When it comes to efficiency, raw performance isn't enough," Woligroski said. "You've got to be able to run this stuff in a laptop."

There are nine members of the Ryzen 8040 series, and the seven most powerful include the Hawk Point NPU. They range from 8 cores/16 threads and a 5.2GHz boost clock at the high end down to 4 cores/8 threads and 4.7GHz. TDPs start at 15W; the high-end parts are rated at 35W and can stretch to 54W.

The nine new chips include:

- Ryzen 9 8945HS: 8 cores/16 threads, 5.2GHz (boost); Radeon 780M graphics; 35-54W
- Ryzen 7 8845HS: 8 cores/16 threads, 5.1GHz (boost); Radeon 780M graphics; 35-54W
- Ryzen 7 8840HS: 8 cores/16 threads, 5.1GHz (boost); Radeon 780M graphics; 20-30W
- Ryzen 7 8840U: 8 cores/16 threads, 5.1GHz (boost); Radeon 780M graphics; 15-30W
- Ryzen 5 8645HS: 6 cores/12 threads, 5.0GHz (boost); Radeon 760M graphics; 35-54W
- Ryzen 5 8640HS: 6 cores/12 threads, 4.9GHz (boost); Radeon 760M graphics; 20-30W
- Ryzen 5 8640U: 6 cores/12 threads, 5.1GHz (boost); Radeon 760M graphics; 20-30W
- Ryzen 5 8540U: 6 cores/12 threads, 4.9GHz (boost); Radeon 740M graphics; 15-30W
- Ryzen 3 8440U: 4 cores/8 threads, 4.7GHz (boost); Radeon 740M graphics; 15-30W

According to AMD's model-number "decoder ring" (which Intel recently slammed as "snake oil"), all of the new processors use the Zen 4 architecture and will ship in laptops in 2024. AMD offers three integrated GPUs based on the RDNA 3 graphics architecture, all supporting DDR5/LPDDR5 memory: the 780M (12 cores, up to 2.7GHz), the 760M (8 cores, up to 2.6GHz), and the 740M (4 cores, up to 2.5GHz). Those iGPUs first appeared in the Ryzen 7040 mobile chips, which debuted earlier this year with the Phoenix NPU.
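AMD's decoder ring assigns a meaning to each digit of the model number. The first digit gives the portfolio year and the third digit gives the Zen architecture generation. The sketch below decodes only those two digits, the ones this article relies on, plus the suffix. The function and table names are hypothetical, and the year table covers only the 7 and 8 series mentioned here.

```python
# Only the digit meanings this article relies on; other first digits return None.
YEAR_BY_FIRST_DIGIT = {7: 2023, 8: 2024}


def decode(model: str) -> dict:
    """Decode the year, architecture, and suffix of a Ryzen mobile model number."""
    digits = model[:4]
    if len(digits) != 4 or not digits.isdigit():
        raise ValueError(f"expected four leading digits: {model!r}")
    return {
        "year": YEAR_BY_FIRST_DIGIT.get(int(digits[0])),  # first digit: portfolio year
        "architecture": f"Zen {digits[2]}",               # third digit: Zen generation
        "suffix": model[4:],                              # e.g. HS or U
    }


for m in ["8945HS", "8840U", "8440U", "7940HS"]:
    print(m, decode(m))
```

Every chip in the list above has a 4 in the third position, which is why they all decode to Zen 4.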

Interestingly, AMD isn’t announcing any “HX” parts for premium gaming, at least not yet. AMD hasn’t ruled out mixing and matching earlier Zen parts as part of the Ryzen 8000 family, either, executives said.

AMD is also disclosing a third-gen NPU, "Strix Point," which it will ship sometime later in 2024, presumably within a next-gen Ryzen processor. AMD didn't disclose any specific Strix Point specs, but said that it will deliver more than three times the generative AI performance of the prior generation.

Ryzen 8040 performance

AMD included some generic benchmark evaluations comparing the Ryzen 9 8945HS to the Intel Core i9-13900H at 1080p on low settings, claiming its own chip beats Intel's by 1.8X. (AMD used nine games for the comparison, without really explaining how it arrived at the numbers.) AMD also claims a 1.4X performance advantage for the same pair of chips, somehow combining Cinebench R23 and Geekbench 6 results.
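AMD didn't say how it rolled nine games, or two different benchmarks, into a single multiplier. One common convention, used by the SPEC CPU benchmarks, is the geometric mean of per-test speedup ratios. It is shown here only as an illustration, not as AMD's method. The ratios below are illustrative placeholders, not AMD's data.

```python
import math


def geometric_mean(ratios: list[float]) -> float:
    """Geometric mean of per-test speedup ratios (new score / baseline score)."""
    if not ratios or any(r <= 0 for r in ratios):
        raise ValueError("ratios must be a non-empty list of positive numbers")
    # Averaging logarithms avoids overflow when multiplying many ratios together.
    return math.exp(sum(math.log(r) for r in ratios) / len(ratios))


# Illustrative values only: a 2x win and a 2x loss cancel out.
print(geometric_mean([2.0, 0.5]))  # 1.0
```

An arithmetic mean of the same two ratios would report 1.25X, which is one reason the choice of aggregation method matters when a vendor quotes a single number.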

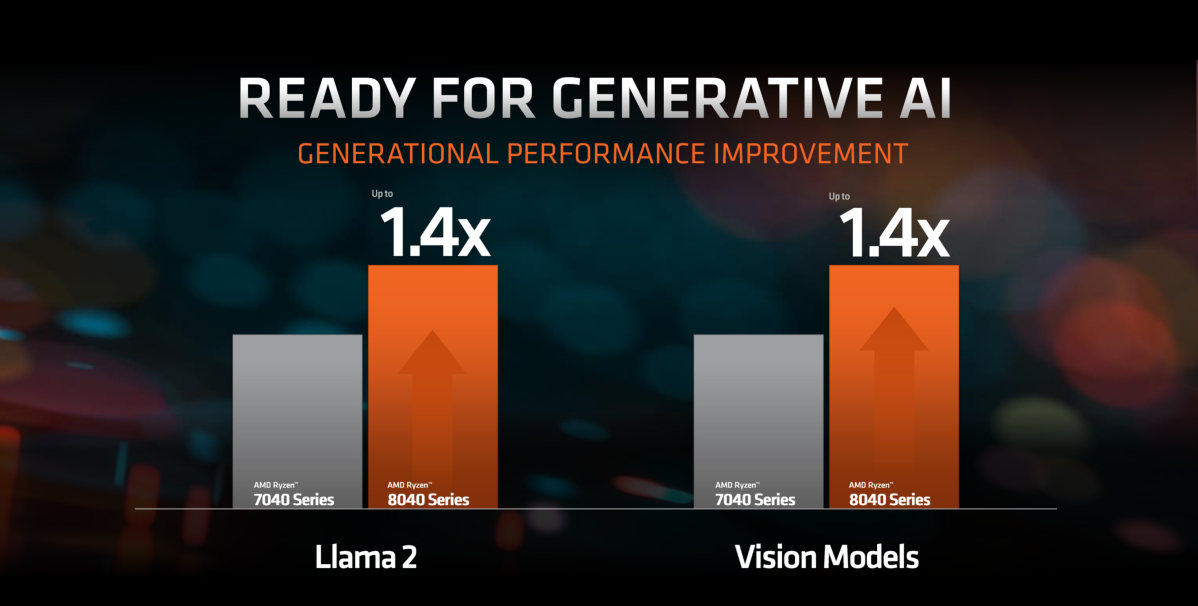

AMD also claimed a gen-over-gen AI improvement of 1.4X on Facebook’s Llama 2 large language model and “vision models,” comparing the 7940HS and 8840HS.

Ryzen AI Software: a new tool for AI enthusiasts

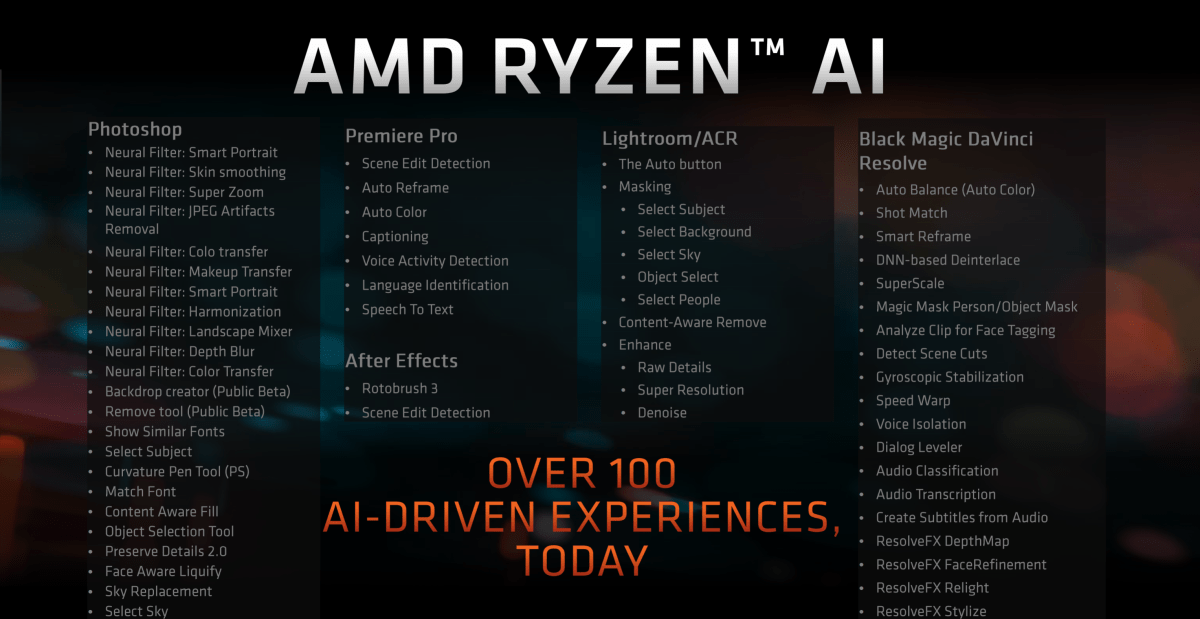

With AI in its infancy, silicon manufacturers don't have many traditional benchmarks to compare against. Instead, AMD executives highlighted localized AI-powered experiences, such as the various neural filters in Photoshop, the masking tools in Lightroom, and a number of tools in Blackmagic Design's DaVinci Resolve. The underlying message is that this is why you need local AI, rather than running it in the cloud.

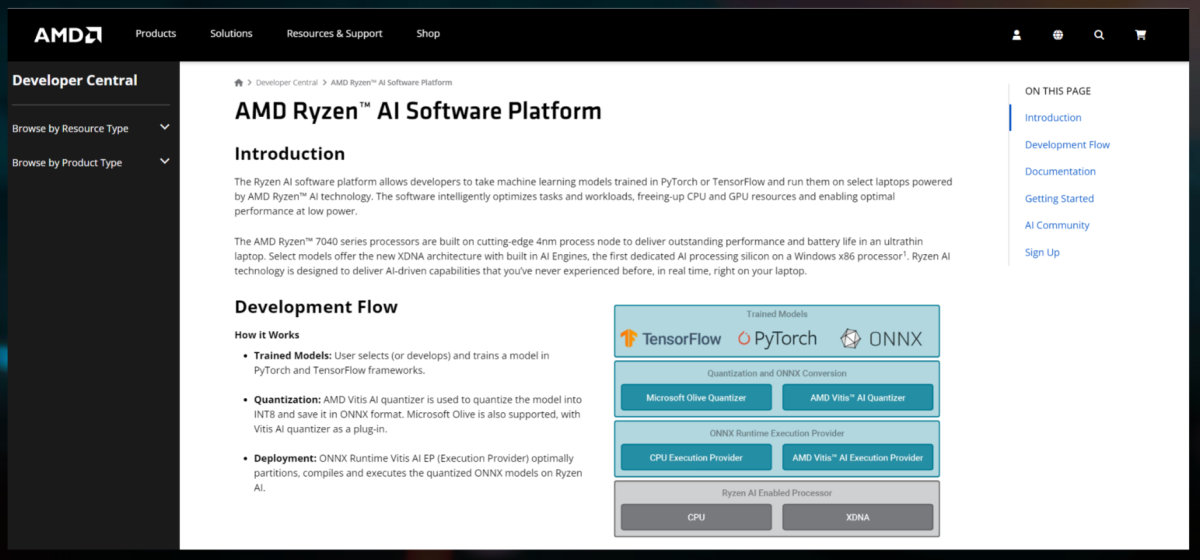

AMD is also debuting AMD Ryzen AI Software, a tool that lets a model developed in PyTorch or TensorFlow for workstations and servers run on a local, AI-enabled Ryzen chip.

The problem with running a truly large AI model locally, whether it powers a chatbot, voice-changing software, or something else, is that such models are built for servers and workstations with powerful processors and plenty of memory. Laptops don't have those resources. Ryzen AI Software is designed to take the model and essentially transcode it into a simpler, less intensive version that can run within the more limited memory and processing power of a Ryzen laptop.
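A back-of-the-envelope calculation shows why memory is the sticking point. The sketch below counts only the bytes needed to hold a model's weights, ignoring activations and runtime overhead. It uses two real model sizes: 7 billion parameters (the smallest Llama 2 variant) and GPT-3's 175 billion.

```python
def weight_footprint_gb(params: float, bits_per_weight: int) -> float:
    """Approximate memory needed just to store a model's weights, in gigabytes."""
    return params * bits_per_weight / 8 / 1e9


for name, params in [("7B model", 7e9), ("GPT-3 (175B)", 175e9)]:
    for bits in (16, 8, 4):
        print(f"{name} at {bits}-bit: {weight_footprint_gb(params, bits):.1f} GB")
```

At 16 bits per weight, a 7-billion-parameter model needs about 14GB for its weights alone. Cutting to 8 or 4 bits brings that to roughly 7GB or 3.5GB, a size a laptop's memory can plausibly accommodate.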

Put another way, the bulk of what you think of as a chatbot, or LLM, is actually the "weights," or parameters: the relationships between various concepts and words. LLMs like GPT-3 have billions of parameters, and storing and executing them (inferencing) takes enormous computing resources. Quantization is a bit like image compression, reducing the size of the weights, ideally without ruining the "intelligence" of the model.
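To make the compression analogy concrete, here is a minimal sketch of one common technique, symmetric per-tensor int8 quantization. AMD hasn't detailed the exact scheme Ryzen AI Software applies, so treat this as a generic illustration. Each weight is stored as an 8-bit integer plus one shared scale factor. The sample weights are made-up values.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric int8 quantization: each weight w is approximated by scale * q."""
    if not weights:
        raise ValueError("weights must be non-empty")
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range [-127, 127].
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate floating-point weights from the stored integers."""
    return [scale * v for v in q]


weights = [0.82, -1.27, 0.05, 0.33, -0.61]  # made-up example weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q)
print(f"scale={scale:.4f}, max error={max_error:.6f}")
```

Each value shrinks from 4 bytes (32-bit float) to 1 byte, and the rounding error per weight is at most half a scale step. Whether the model stays "intelligent" depends on how well it tolerates that small, systematic error.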

AMD and Microsoft use ONNX for this, an open-source format and runtime with built-in optimizations and simple startup scripts. Ryzen AI Software is designed to handle this quantization automatically, saving the model in an ONNX format that is ready to run on a Ryzen chip.

Executives said that this Ryzen AI Software tool will be released today, giving both independent developers and enthusiasts an easier way to try out AI themselves.

AMD is also launching the AMD Pervasive AI Contest, with prizes for developing robotics AI, generative AI, and PC AI applications. Prizes in the PC AI category, which involves creating unique apps for speech or vision, begin at $3,000 and climb to $10,000.

All this helps propel AMD in what is still an early race to establish AI, especially on the client PC. Next year promises to clarify where each chip company stands as they round the first turn.

This story was updated at 10:26 AM on Dec. 7 with additional detail.

Author: Mark Hachman, Senior Editor

As PCWorld’s senior editor, Mark focuses on Microsoft news and chip technology, among other beats. He has formerly written for PCMag, BYTE, Slashdot, eWEEK, and ReadWrite.
