Snapchat is introducing new parental controls that will allow parents to restrict their teens from interacting with the app’s AI chatbot. The changes will also allow parents to view their teens’ privacy settings, and get easier access to Family Center, which is the app’s dedicated place for parental controls.

Parents can now restrict My AI, Snapchat’s AI-powered chatbot, from responding to chats from their teen. The new parental control arrives nearly a year after Snapchat launched My AI. The company faced criticism for launching the chatbot without appropriate age-gating features, as it was found to be chatting with minors about topics like covering up the smell of weed and setting the mood for sex.

Snapchat says the new restriction feature builds on My AI’s current safeguards, “including protections against inappropriate or harmful responses, temporary usage restrictions if Snapchatters repeatedly misuse the service, and age-awareness.”

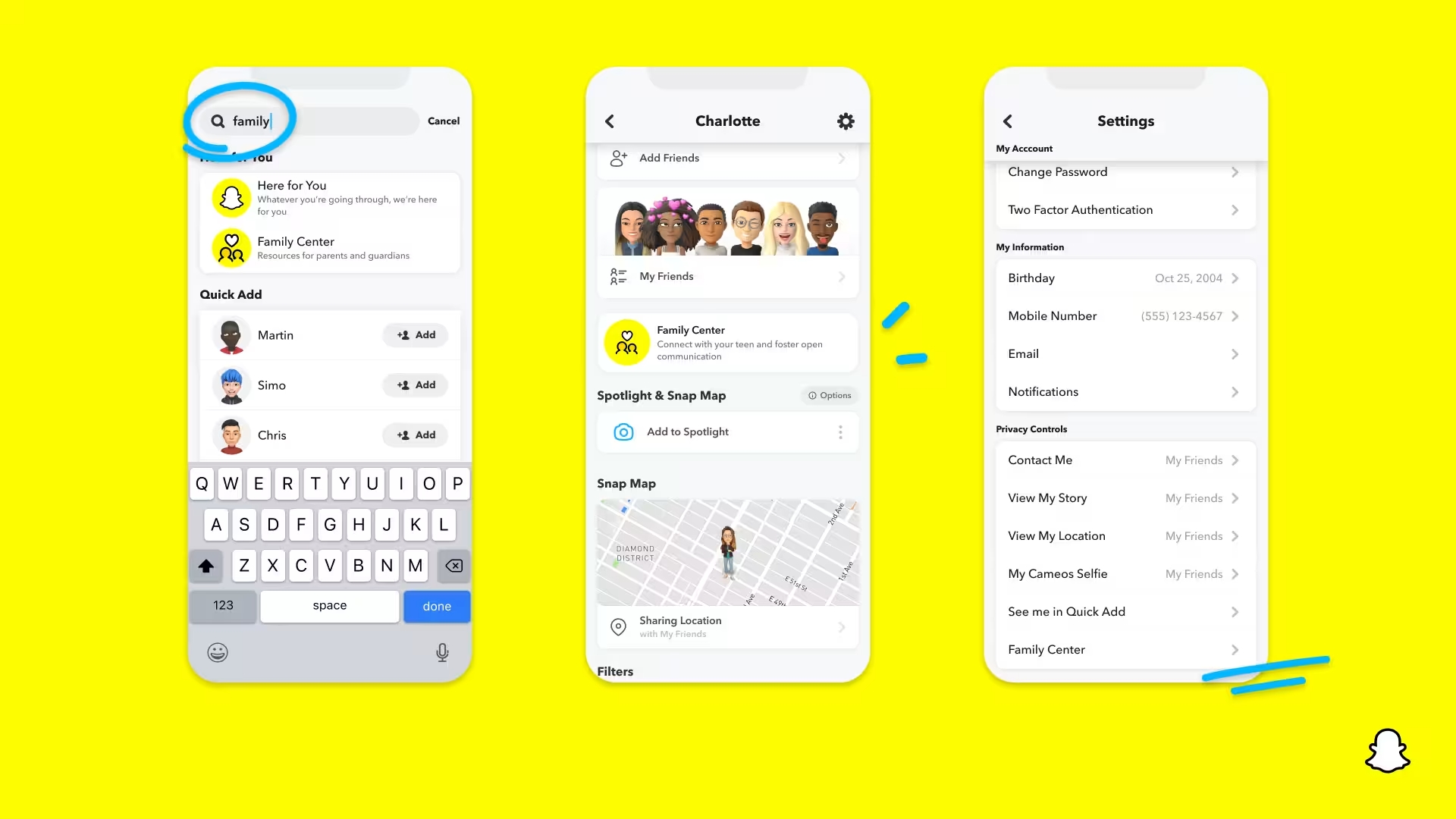

In addition, parents will now be able to see their teens’ safety and privacy settings. For instance, a parent can see if their teen has the ability to share their Story with their friends or a smaller group of select users. Plus, a parent can see who is able to contact their teen on the app by viewing their contact settings. Parents can now also see if their teen is sharing their location with their friends on the Snap Map.

For parents who may be unaware of the app’s parental controls, Snapchat is making Family Center easier to find. Parents can now reach Family Center right from their profile, or by heading to their settings.

“Snapchat was built to help people communicate with their friends in the same way they would offline, and Family Center reflects the dynamics of real-world relationships between parents and teens, where parents have insight into who their teens are spending time with, while still respecting the privacy of their personal communications,” Snapchat wrote in the blog post. “We worked closely with families and online safety experts to develop Family Center and use their feedback to update it with additional features on a regular basis.”

Snapchat launched Family Center back in 2022 in response to increased pressure, both in the U.S. and abroad, on social networks to do more to protect young users on their platforms from harm.

The expansion of the app’s parental controls comes as Snapchat CEO Evan Spiegel is scheduled to testify before the Senate on child safety on January 31, alongside executives from X (formerly Twitter), TikTok, Meta and Discord. Committee members are expected to press the executives on their platforms’ inability to protect children online.

The changes also come two months after Snap and Meta received formal requests for information (RFI) from the European Commission about the steps they are taking to protect young users on their social networks. The Commission has also sent similar requests to TikTok and YouTube.

Snapchat isn’t the only company to release features related to child safety this week, as Meta also introduced new limitations. The tech giant announced that it was going to start automatically limiting the type of content that teen Instagram and Facebook accounts can see on the platforms. These accounts will automatically be restricted from seeing harmful content, such as posts about self-harm, graphic violence and eating disorders.
