Image: Gordon Mah Ung/IDG

Admit it. You love underdog tales. The Cleveland Cavaliers coming back from a 3-1 deficit against the Golden State Warriors. The New York Giants defeating the 18-0 New England Patriots, and the Average Joes beating the heavily favored Purple Cobras in the dodgeball finals.

Mentioned in this article

AMD Ryzen 7 1800X processor

Price When Reviewed: $499.00 · Best Prices Today: $235 at Amazon

Well, you can now add AMD’s highly anticipated Ryzen CPU to that list of epic comebacks in history. Yes, disbeliever, AMD’s Ryzen almost—almost—lives up to the hype. What’s more, it delivers the goods at an unbeatable price: $499 for the highest-end Ryzen 7 1800X. That’s half the cost of its closest Intel competitor.

But before AMD fans rush to rub it into Intel fans’ faces, there’s a very important thing you need to know about this CPU and its puzzling Jekyll-and-Hyde performance. For some, we dare say, it might even be a dealbreaker. Read on.

Gordon Mah Ung

The boxed Ryzen 7 1800X and Ryzen 7 1700X won’t come with stock coolers.

What Ryzen is

We can’t get into a review of Ryzen without first recalling the tragic circumstances that came before it: AMD’s Bulldozer and Vishera CPUs sold under the FX brand. Intended as a competitive comeback to Intel’s own epic comeback chips—the Core 2 and Core i7—AMD’s FX series instead went down in flames performance-wise.

The failure of Bulldozer and Vishera left AMD languishing for years, all but abandoning the high end and nursing itself on an ARM CPU for servers. In fact, the last time AMD had a truly competitive CPU, people still listened to INXS, The Weakest Link was a thing, and George W. Bush was president. The technical term for that is: a hell of a long time ago.

Ryzen is nothing like its star-crossed predecessors, though. The FX CPUs used a technique called clustered multithreading (CMT) that shared key components of the chip; they were built on an uncompetitive 32nm, and later 28nm, process; and the 8-core versions more often than not lost to Intel’s 4-core chips.

As anyone who has wallowed in failure only to return to greatness knows, tragedy and loss only make an underdog story sweeter. With Ryzen, AMD rebooted its CPU design, tossing aside CMT. It even adopted a technique from Intel’s playbook called simultaneous multithreading (SMT), which lets each physical core run two threads at once by sharing its execution resources between them.

AMD

AMD’s new Ryzen CPU does away with the shared cores of the FX line in favor of stand-alone cores with simultaneous multithreading.

Whereas AMD’s design once had every two cores share resources, each Ryzen core is now a distinct entity built into a four-core complex. In the shot below, two core complexes make up an 8-core Ryzen chip.

AMD

Each Zen core complex is made up of four individual cores. Two of these complexes make up the 8-core Ryzen chip in the preceding image.

Also gone is the 32nm process that Bulldozer and Vishera were built on. Ryzen CPUs are built on a state-of-the-art 14nm process by AMD’s spun-off fab, GlobalFoundries. In short, the core design seems to have set the stage for an AMD return to glory.

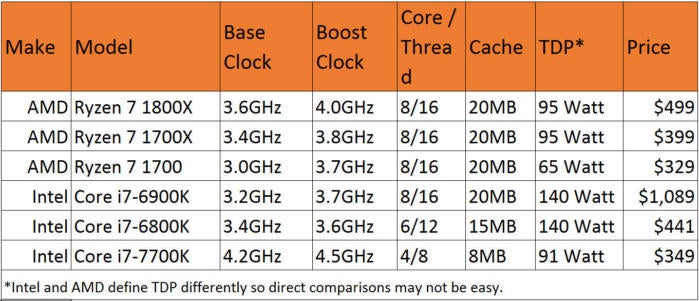

IDG

AMD’s Ryzen 7 lineup compared to Intel’s CPUs.

Ryzen: Heavy-duty CPU, light-duty chipset

It should be pointed out that Ryzen’s chipset isn’t exactly heavy-duty. Because Ryzen is more of a system-on-chip (SoC) than a CPU, it contains many interface features on-chip, which are augmented by the chipset on an AM4 motherboard (to learn more about the various chipsets, see our guide to choosing an AM4 motherboard). The upshot is that while Ryzen provides up to eight physical cores, the surrounding infrastructure is more consumer-oriented. Ryzen has 24 PCIe lanes in total, 16 of which are dedicated to the GPU. If you run two video cards on a board with the highest-end chipset, the X370, that single x16 link is split into two x8 connections.

The remaining PCIe lanes can be used by the motherboard maker for NVMe or other I/O options. That’s not much different from what Intel does with the current Core i7-7700K ($349 on Amazon), which also has 16 lanes of PCIe for the GPU. While Intel’s highest-end consumer Z270 chipset appears to offer a lot more I/O, with up to 24 lanes of PCIe, the link between the chipset and the CPU (DMI 3.0, roughly equivalent to four PCIe 3.0 lanes) is a bottleneck that keeps those lanes from being fully utilized at once.
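To make the lane bookkeeping above concrete, here is a minimal Python sketch that encodes only the figures this review gives for Ryzen: 24 lanes total, 16 for graphics, and an x8/x8 split when two cards are installed. The function names are hypothetical, and the sketch does not model how any particular board maker wires the leftover lanes.

```python
# Hypothetical sketch of the Ryzen 7 PCIe lane budget, using this review's figures.
TOTAL_LANES = 24     # PCIe lanes on the Ryzen chip itself
GRAPHICS_LANES = 16  # lanes dedicated to the graphics slot(s)


def graphics_split(num_cards: int) -> list:
    """Return the link width each graphics card gets from the 16 GPU lanes.

    One card gets the full x16; two cards split it into two x8 links.
    """
    if num_cards == 1:
        return [GRAPHICS_LANES]
    if num_cards == 2:
        return [GRAPHICS_LANES // 2] * 2
    raise ValueError("this sketch only models one or two graphics cards")


def remaining_lanes() -> int:
    """Lanes not dedicated to graphics (left to the board maker, per the review)."""
    return TOTAL_LANES - GRAPHICS_LANES


print(graphics_split(1))  # [16]
print(graphics_split(2))  # [8, 8]
print(remaining_lanes())  # 8
```

The point of the sketch is simply that adding a second card does not add lanes; it divides the same 16.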

For most people, there is still plenty of speed on tap with both Ryzen and the Core i7-7700K. But for those who need insane numbers of PCIe lanes, for, say, multiple NVMe drives used simultaneously, Intel’s Broadwell-E and its X99 chipset have the advantage.

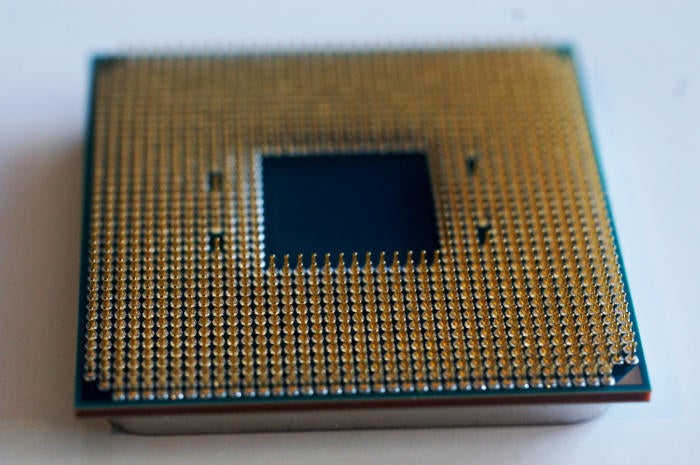

Gordon Mah Ung

In order of appearance: AMD’s FX, an AMD Ryzen, an Intel Core i7-5960X, and an Intel dual-core Kaby Lake CPU.

Broadwell-E and Haswell-E feature up to 40 lanes of PCIe directly wired to the CPU, plus an additional eight lanes of PCIe in the X99 chipset. But, again, for the vast majority of consumers, even prosumers, that’s overkill.

AMD has already said Ryzen exceeds its goal of a 40-percent increase over previous designs—in fact, it has hit a 52-percent increase in clock-for-clock performance over the Piledriver cores. But no one cares about that. The only thing you want to know is how it does against Intel.

Read on for how we tested.

How we tested

For the benchmark-o-rama, I set up four separate PCs. All featured clean installs of the latest version of 64-bit Windows 10. Each of the PCs was also built using the same SSD and GPU, and the latest BIOS was used on each board.

I turned to what we believe are Ryzen’s natural competitors: Intel’s $1,089 8-core Broadwell-E Core i7-6900K; the $441 6-core Broadwell-E Core i7-6800K; and the $349 4-core Kaby Lake Core i7-7700K. And, although it’s well beyond its prime, I also included an 8-core AMD FX-8370, which is currently the top Vishera-based CPU you can get without wading into the crazy range (meaning AMD’s insane FX-9590 chip, which works with only a handful of motherboards due to its excessive power consumption).

For the pair of Broadwell-E processors, I tested on an Asus X99 Deluxe II board. I used an Asus Z270 Maximus IX Code for the Kaby Lake chip. I paired the Vishera with an ASRock 990FX Killer. The Ryzen CPUs were tested with an Asus Crosshair VI Hero board.

I used a Founders Edition GeForce GTX 1080 on all of the builds, and the clock speeds were checked for consistency.

Gordon Mah Ung

The AMD FX on the lower left has fewer pins than the AMD Ryzen on the lower right. The two Intel chips above put the pins in the socket.

RAM configuration

I opted to test each system with 32GB of RAM, fully populating the memory slots with standard JEDEC-speed modules. The Ryzen and Kaby Lake systems used four DIMMs of DDR4/2133, the Broadwell-E systems used eight DIMMs of DDR4/2133, and the FX system used four DIMMs of DDR3/1600, for 32GB in every case. The Ryzen, Kaby Lake, and FX machines ran in dual-channel mode, whereas the Broadwell-E box ran in quad-channel mode.
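Channel count matters because theoretical peak memory bandwidth scales with it: transfer rate times the 8-byte (64-bit) width of each DDR3/DDR4 channel times the number of channels. The Python sketch below applies that standard formula to the configurations above. The results are theoretical peaks, not measurements from this review; it treats DDR4/2133 as exactly 2,133 MT/s, and real-world throughput will be lower.

```python
def peak_bandwidth_gbs(mts: int, channels: int, bus_bytes: int = 8) -> float:
    """Theoretical peak memory bandwidth in GB/s (10^9 bytes per second).

    mts:       transfer rate in megatransfers per second (2133 for DDR4/2133)
    channels:  number of memory channels in use
    bus_bytes: width of one channel in bytes (64 bits = 8 bytes for DDR3/DDR4)
    """
    return mts * 1e6 * bus_bytes * channels / 1e9


# Memory setups used in this review: (transfer rate, channel count).
configs = {
    "Ryzen / Kaby Lake (DDR4/2133, dual)": (2133, 2),
    "Broadwell-E (DDR4/2133, quad)": (2133, 4),
    "FX (DDR3/1600, dual)": (1600, 2),
}

for name, (mts, channels) in configs.items():
    print(f"{name}: {peak_bandwidth_gbs(mts, channels):.1f} GB/s")
# Ryzen / Kaby Lake (DDR4/2133, dual): 34.1 GB/s
# Broadwell-E (DDR4/2133, quad): 68.3 GB/s
# FX (DDR3/1600, dual): 25.6 GB/s
```

On paper, the quad-channel Broadwell-E boxes get twice the peak bandwidth of the dual-channel Ryzen and Kaby Lake systems at the same DDR4/2133 speed.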

Cooling configuration

One final disclosure: I expected to test all the builds using closed-loop coolers. But because AMD didn’t send me a CLC for Ryzen until late into testing, I had to test all the PCs using air cooling. AMD fans might suspect this hobbled the Ryzen parts, which have a mode called eXtended Frequency Range (XFR) that allows the chip to clock up to the capabilities of the cooler.

I should add here that XFR adds only up to 100MHz to the chip’s speeds today. The Ryzen 7 1800X with XFR, for example, would hit 4.1GHz, versus its Precision Boost speed of 4GHz. The Noctua air cooler I used is itself fairly burly, and I did see XFR speeds kicking in on occasion.

Read on for benchmarks, benchmarks, and more benchmarks.

How fast is it? There’s only one way to find out

Our benchmarking begins with a battery of productivity tests. First, Cinebench R15.037 for multithreaded performance, then Blender 2.78a and POV-Ray for image-rendering chops. We add Handbrake and Adobe Premiere CC 2017 for video encoding.

Cinebench R15.037 performance

First up is Maxon’s Cinebench R15 benchmark. This test is based on Maxon’s Cinema4D rendering engine. It’s heavily multithreaded, and the more cores you have, the more performance you get. AMD has been showing off this benchmark for a couple of weeks now, with AMD’s 8-core Ryzen 7 1800X exceeding Intel’s 8-core Broadwell-E chip.

My own tests don’t quite match AMD’s results. For one, my Core i7-6900K scores are slightly higher than the ones AMD reported. AMD’s own tests, in fact, showed the midrange Ryzen 7 1700X matching Intel’s mighty 8-core. While I didn’t have time to test the $400 Ryzen 7 1700X, I did test the lower-wattage and lower-priced Ryzen 7 1700 ($330 on Amazon).

The insanity? Not only do we have a $500 Ryzen besting an Intel chip that costs twice as much, the $330 Ryzen comes pretty damn close. This test is all kinds of win for AMD—and Intel fans, it’s now independently confirmed. Damn.
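For a sense of scale, this short Python sketch works out each Ryzen chip’s price as a fraction of the Core i7-6900K’s, using only the prices quoted in this review. It says nothing about performance, and the dictionary and function names are illustrative.

```python
# Prices as quoted in this review, in US dollars.
PRICES = {
    "Ryzen 7 1800X": 499,
    "Ryzen 7 1700": 330,
    "Core i7-6900K": 1089,
    "Core i7-6800K": 441,
    "Core i7-7700K": 349,
}


def cost_ratio(chip: str, reference: str = "Core i7-6900K") -> float:
    """Return the fraction of the reference chip's price that `chip` costs."""
    return PRICES[chip] / PRICES[reference]


for chip in ("Ryzen 7 1800X", "Ryzen 7 1700"):
    print(f"{chip}: {cost_ratio(chip):.0%} of the Core i7-6900K's price")
# Ryzen 7 1800X: 46% of the Core i7-6900K's price
# Ryzen 7 1700: 30% of the Core i7-6900K's price
```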

PCWorld

Cinebench R15 shows Ryzen is indeed a multithreaded monster of a CPU.

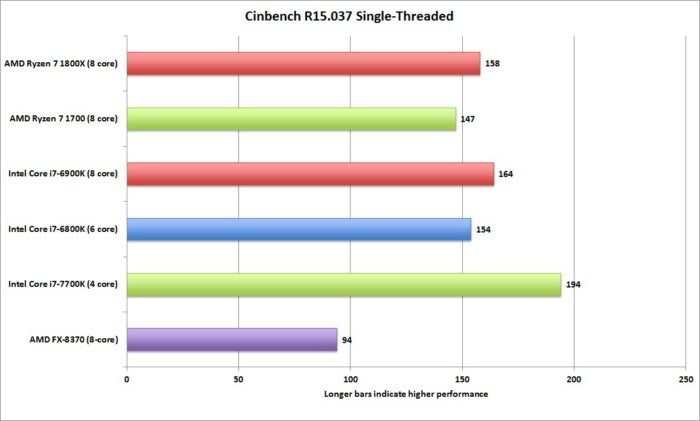

Cinebench R15 also features a single-threaded test that loads up only a single CPU core. Although the results here are quite good for AMD, the winner is the Core i7-7700K. We can attribute that to the Intel CPU’s higher clock speed (4.2GHz to 4.5GHz) and the greater efficiency of Intel’s newest core. Still, Ryzen can stand tall against Intel’s best and brightest.

PCWorld

Cinebench single-threaded performance shows the new Ryzen chips can also hang with the best Intel CPUs.

My final Cinebench test used an update that, Maxon officials told PCWorld, came out in December at AMD’s request. When asked what changed between Cinebench R15.037 and Cinebench R15.038, Maxon declined to give details, saying they were “proprietary” to AMD. Strangely, AMD officials told me they didn’t even know there was a change. In fact, the company’s recent demonstrations were done on the older version.

Still, when a benchmark is changed to correct something for a CPU, alarms go off and conspiracy theories spawn, so I also ran Cinebench R15.038 on all the CPUs. The result? Basically nothing changed for any of the CPUs involved. Cinebench, of course, was one of the benchmarking applications named in the Federal Trade Commission’s suit against Intel for allegedly cooking tests to hurt AMD CPUs. Intel ultimately settled, but AMD fans have long blamed Cinebench for being crooked. After seeing these results, it appears most of that blame is misplaced.

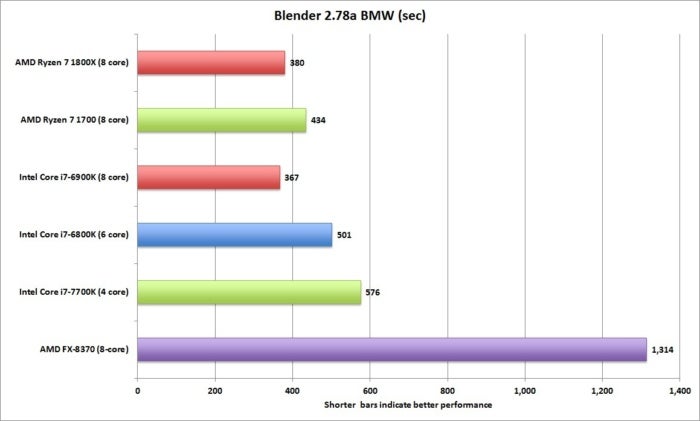

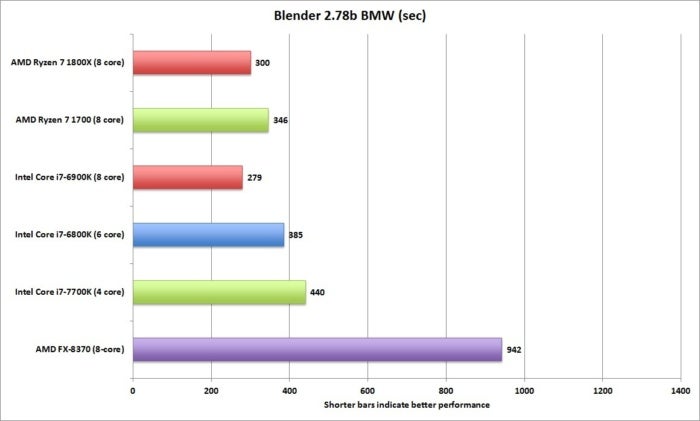

Blender 2.78a performance

The Ryzen hype train kicked off last year when AMD showed its chip equaled Intel’s 8-core in the open-source Blender render engine. It’s a popular application used in many indie films for effects. You’d expect Ryzen to perform well here, and it does. Although I’m seeing the Ryzen 7 1800X run just a tad behind the Core i7-6900K, it’s not enough to justify spending twice as much, is it? And that’s basically all win for AMD.

PCWorld

Blender performance shows the $500 Ryzen 7 1800X is just slightly slower than the $1,089 Core i7-6900K CPU.

The thing is, at CES the media heard that maybe, just maybe, Ryzen’s strong Blender showing stemmed from a bug in Blender that had been documented for some time. Birds whispered that Ryzen might not perform as well once the bug was ironed out. Fortunately, the bug was fixed, and a new version of Blender was compiled and released. To see if the birds were right, I also ran the CPUs on Blender 2.78b and saw a significant increase in performance for the Intel CPUs, but the AMD FX and the Ryzen chips also benefited. I left the axis scale the same so you could see the improvement.

In the end, though, it didn’t matter, and when you factor in price, Ryzen is all over this one too.

PCWorld

Blender was recently updated to fix several performance bugs, but the fixes appear to benefit all CPUs we tested.

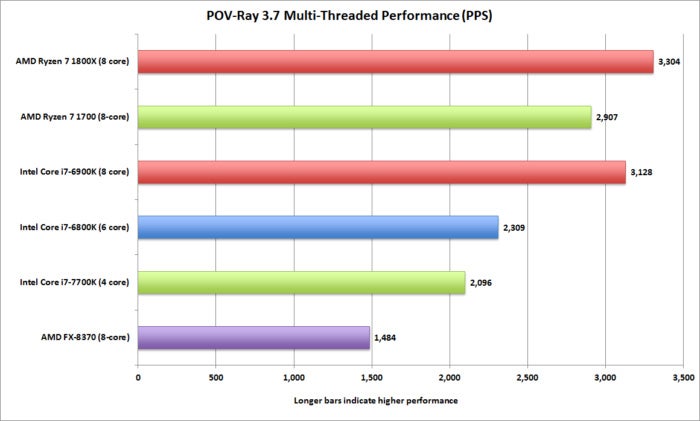

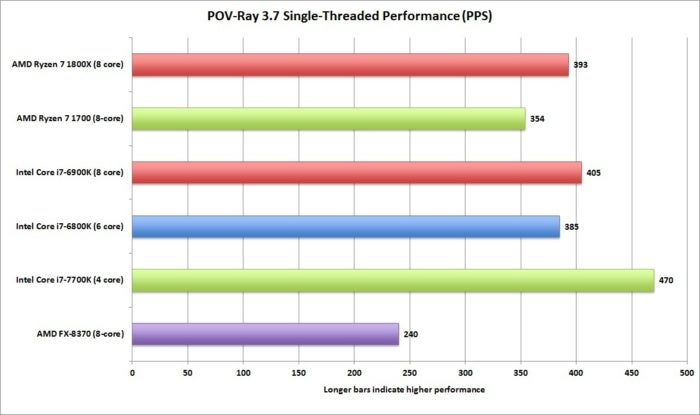

POV-Ray performance

Our last rendering test uses the POV-Ray Raytracer. This open-source benchmark dates back to the days of the Amiga and, like the two previous tests, loves cores. First up is the multithreading performance test using the built-in benchmark scene. The result is presented in pixels per second, and the higher the number, the faster the render.

It is, again, all good news for AMD, as the Ryzen 7 1800X is slightly faster than the Intel CPU that costs twice as much. Even better, peep that Ryzen 7 1700, which costs about a third as much as the Core i7-6900K. That’s an ouch in every single way you can measure it.

PCWorld

POV-Ray agrees that Ryzen gives you a ton of performance for the buck.

POV-Ray also includes a single-threaded test that shows the Broadwell-E chips pulling slightly ahead of the Ryzen 7 1800X. The fastest, no surprise, is the newest Core i7-7700K CPU, which runs at a higher clock speed and features Intel’s newest core. The truth is, though, you won’t be running POV-Ray in single-threaded mode when doing a render; you’ll be using it in multithreaded mode.

PCWorld

The Intel chips pull ahead in the single-threaded test, but it’s mostly academic since you won’t be using it this way.

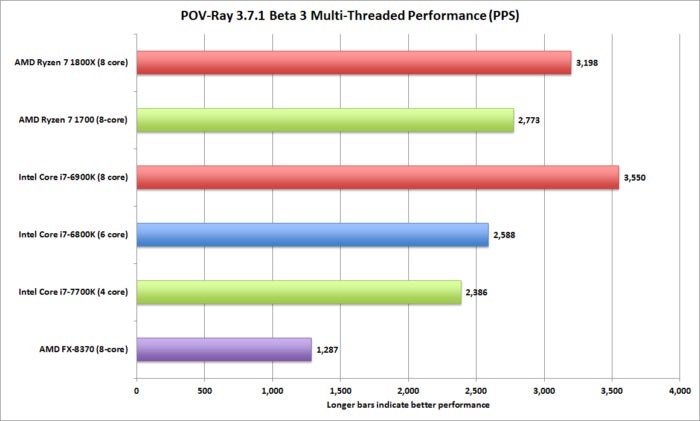

But wait, there’s more. Like Cinebench and Blender, POV-Ray has also been updated recently. The new version is still in beta but available for testing, so I downloaded POV-Ray 3.7.1 beta 3 and ran it on all of the machines. Unlike the Cinebench and Blender updates, which didn’t change the standings, this one produced a sudden swing toward the Broadwell-E chips. While version 3.7 had the Ryzen chips ahead, with 3.7.1 beta 3 they were suddenly behind: The Ryzen 7 1800X was slower than the Core i7-6900K, while the Ryzen 7 1700 came in awfully close to the 6-core Core i7-6800K.

PCWorld

POV-Ray 3.7.1 beta 3 favors Intel.

What gives? I spoke with POV-Ray coordinator Chris Cason, who told me the current beta adds AVX2 support, and while 3.7 was compiled with Microsoft’s Visual Studio 2010, the beta version is compiled with Visual Studio 2015.

POV-Ray was fingered by Extremetech.com’s Joel Hruska a few years ago for appearing to favor Intel. POV-Ray officials, though, have long denied it. Cason told PCWorld: “We don’t care what hardware people use, we just want our code to run fast. We don’t have a stake in either camp—in fact, for the past few years, I’ve been running exclusively AMD in my office. I’m answering this email from my dev system, which has an AMD FX-8320.”

So conspiracy theorists, go at it.

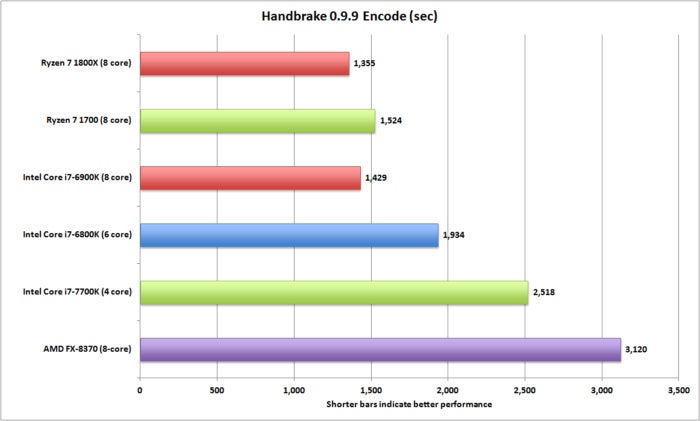

Handbrake performance

Moving on from 3D rendering, my next test uses Handbrake, a wonderful free encoder that’s handy for converting video and is heavily multithreaded. It also supports Intel’s QuickSync, but my tests today stick with standard CPU encoding. For that, I take a 30GB 1080p MKV file and convert it using the Android preset. The Handbrake version I use is older than what’s available now, but it’s still a relevant test of encoding performance. And yes, Intel fans, enabling QuickSync on the Core i7-7700K (by turning on the integrated graphics in your board’s BIOS) would let the Kaby Lake chip wail on all the others here.

But, if you use your CPU for encoding with Handbrake, and not the graphics chip, here’s what you’d get: a Ryzen-colored win with unicorns and rainbows. Sure, the Ryzen 7 1800X is only a tad faster, but half the price amirite?

PCWorld

The 8-core CPUs easily win our Handbrake encoding test.

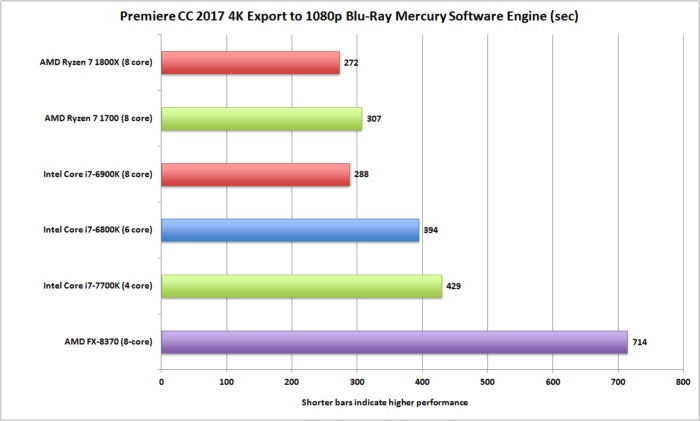

Adobe Premiere CC 2017 performance

Here’s why I think the Handbrake encode is a valid test: Its results closely track those from Adobe Premiere Creative Cloud 2017. For this project, I take a real-world 4K video shot by our video team on a Sony A7S and encode it using the 1080p Blu-ray preset in Premiere. For the Premiere test, I put the project on a Plextor M8Pe NVMe drive so that the speed of the SATA SSD I used elsewhere wouldn’t be a bottleneck.

Because I’m changing the resolution of the video, I use the “Maximum Render Quality” option. For this encode, I use the Mercury software engine in Premiere, which hits the CPU cores rather than the GPU. This may seem unrealistic, but driver drift for the GPU can affect render quality, and some still feel CPU encodes look better. Either way, more cores are better here. The Ryzen 7 1800X is a bit faster than the Intel chip that costs twice as much, and the Ryzen 7 1700, which costs about a third as much as the Intel chip, is looking pretty, too. I mean damn.

PCWorld

The Mercury software engine in Premiere hits the CPU cores rather than the GPU.

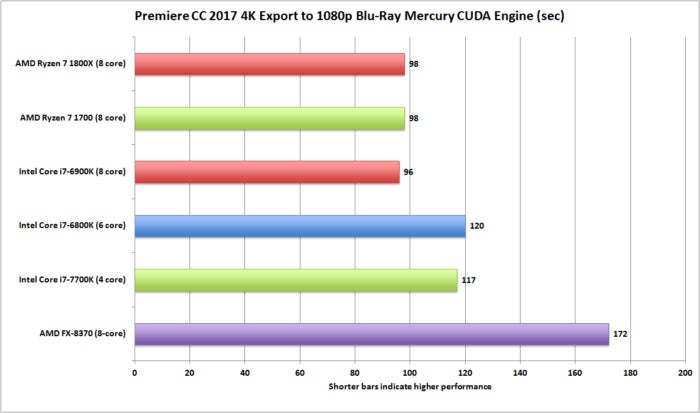

But wait, video editors are tsk-tsk-ing the results because everyone uses the GPU for rendering today. That’s a good point, so I also rendered the project with the Mercury Playback engine using Nvidia’s CUDA. GPU rendering does indeed take a nice chunk of time off the render. Keep in mind, the video for this test is 4K, but only 1 minute, 43 seconds long. If this were a one-hour video, you’d throw money at the CPU to cut down your render times. The question is, which CPU would you throw the money at? The one that basically gets you a “free” $500 GPU, or the one that doesn’t?

PCWorld

The GeForce GTX 1080 takes a chunk off the render time, but you still get the best performance from the 8-core chips.

Read on for the surprising gaming performance of Ryzen.

Gaming performance

Let’s move on to what may be the second-most important category for Ryzen: gaming. That’s pretty much where the story goes from incredible performance per dollar to head-scratching, even maddening, results.

Gordon Mah Ung

AMD’s Ryzen 7 series features 8 cores and 16 threads of performance.

3DMark performance

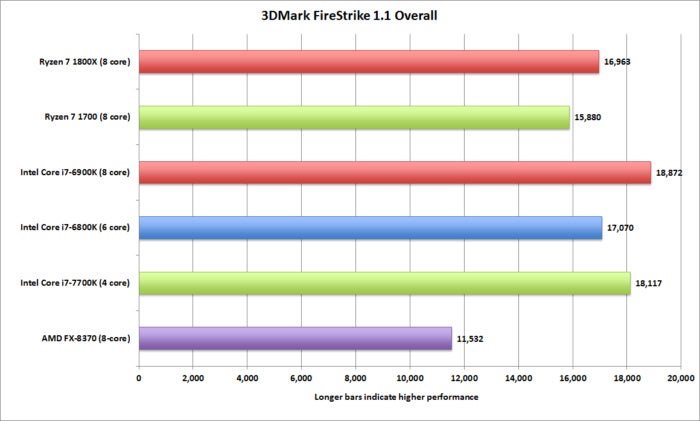

First up is the always popular 3DMark test. Now owned by UL, this is a synthetic performance test that measures gaming. Yes, it’s synthetic, but for the most part, it’s widely regarded as being neutral ground. The first result is the overall score in 3DMark FireStrike. The Core i7-6900K takes the top spot, with the Core i7-7700K taking the second spot, and the Ryzen 7 1800X a close third. It’s pretty much a yawner.

PCWorld

3DMark’s overall score factors in the physics and graphics scores for the final verdict.

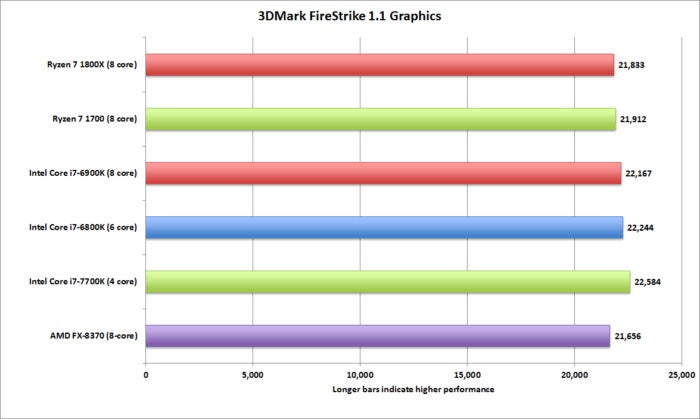

3DMark’s Graphics subscore is designed to stress the graphics card. If 3DMark is doing its job, we should see very little difference among our machines, as we used identical GeForce GTX 1080 cards for our testing. Everything is right as rain. Only the FX is slightly slower, which could be because, well, it’s an AMD FX. Or maybe it’s the PCIe 2.0 on the platform. Either way, nothing to get excited about.

PCWorld

This shows 3DMark is functioning perfectly as a GPU benchmark. With the exact same cards in each machine, the scores should be just about the same, too.

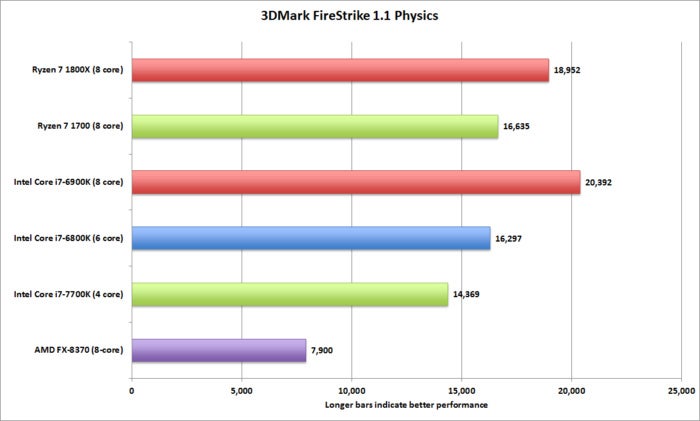

3DMark’s Physics test stresses core count, and we see the 8-core chips ahead by a good margin. The low-wattage Ryzen 7 1700 actually appears to break even with the 6-core Core i7-6800K here. The lower clock speed of the R7 1700 could be hurting it. And FX, yeah, “8 cores” indeed.

PCWorld

The physics test shows you in theory what you get from more cores. In reality, though, few games actually use that many cores.

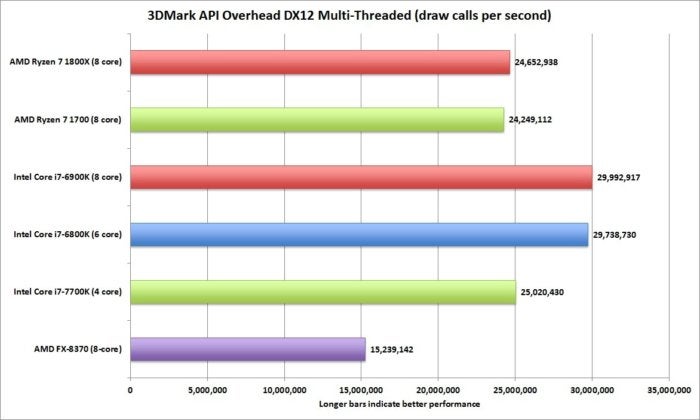

3DMark also includes a test of a CPU’s capabilities when tasked with issuing draw calls under DirectX 12. The results clearly put the Intel chips in the lead. Not only is the Core i7-7700K just about dead-even with both Ryzen chips, but the 6- and 8-core Broadwell-E parts are a sizable distance ahead. If you’re wondering why the 6-core Broadwell-E is neck-and-neck with the 8-core part, I’ve found this particular test doesn’t scale much beyond six cores.

PCWorld

The API test in 3DMark measures how many draw calls a particular PC can issue in Microsoft’s new DirectX 12.

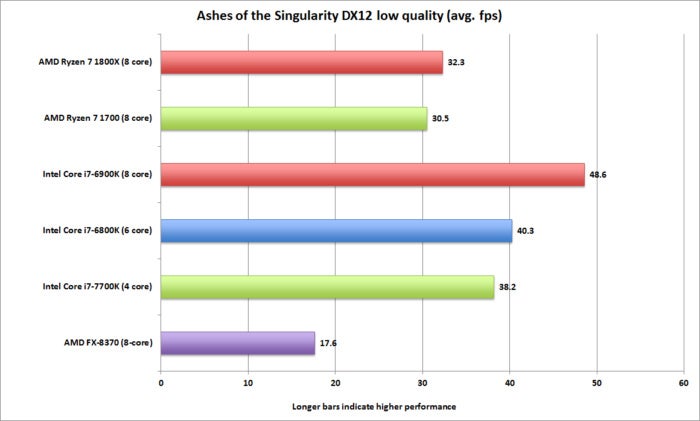

Ashes of the Singularity DX12 performance

The good news is, we also have a real-world DX12 game in Ashes of the Singularity. This game is basically the tech demo for what can be done in DirectX 12, and it loves CPU cores. For this test, I ran at 1920×1080 resolution with the visual quality set to low. Ashes has a GPU-focused mode and a CPU-focused mode. I chose the latter because I wanted to see the frame rate when a crazy number of objects (and draw calls) are thrown at a game. The result was again confusing. The Core i7-6900K walks away from the pack, and even the 6-core Core i7-6800K shows Kaby Lake what-for in the test. The Ryzens are oddly slow considering they have more cores than both the Core i7-6800K and the Core i7-7700K. To be fair, much like 3DMark’s API test, I haven’t seen this test scale to very high core counts. Right before we went to press, AMD sent out a statement from the Ashes developer Oxide:

“Oxide games is incredibly excited with what we are seeing from the Ryzen CPU. Using our Nitrous game engine, we are working to scale our existing and future game title performance to take full advantage of Ryzen and its 8-core, 16-thread architecture, and the results thus far are impressive,” said Stardock and Oxide CEO Brad Wardell. “These optimizations are not yet available for Ryzen benchmarking. However, expect updates soon to enhance the performance of games like Ashes of the Singularity on Ryzen CPUs, as well as our future game releases.”

So, not valid?

PCWorld

Ashes of the Singularity is the premier tech demo for what can be done in DirectX 12.

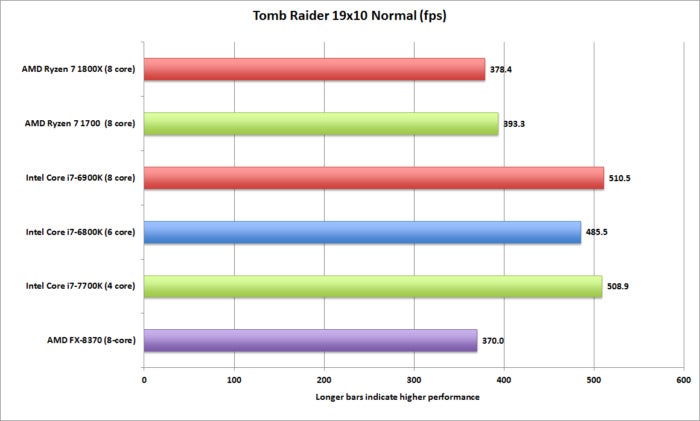

Tomb Raider performance

I decided to look at Ryzen’s performance using the older version of Tomb Raider. Those looking at the theoretical performance of a CPU in games typically want to take the graphics card out of the equation by running the game at lower settings or even lower resolutions than one would normally use with their given hardware. For this test, I ran Tomb Raider at 1920×1080 resolution at the normal setting. The performance gap again put Ryzen in a bad spot. In fact, it’s frankly a very puzzling result. One can argue that when you’re pushing in excess of 300 or 400 frames per second, it’s kinda pointless, but why isn’t Ryzen, which so handily matches Intel’s Broadwell-E in other tests, right up there with Broadwell-E?

PCWorld

Tomb Raider shows nothing but bad news for Ryzen.

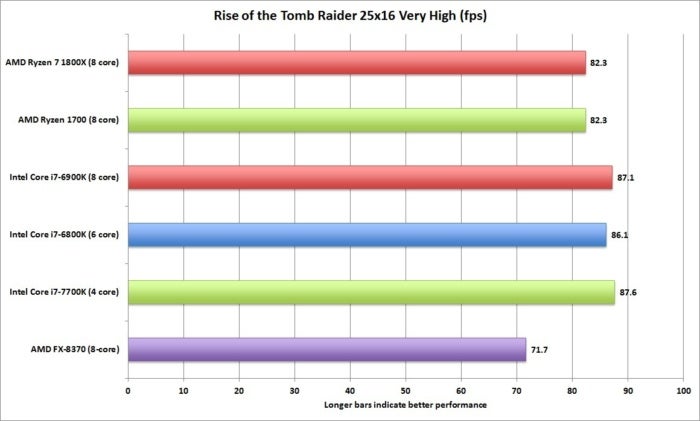

Rise of the Tomb Raider performance

Let’s move on to Rise of the Tomb Raider. Rise is newer and tougher on the GPU. At 1920×1080 and the medium setting, we’re again seeing rather disappointing performance numbers for Ryzen. I’d expected Ryzen to be in near lockstep with Broadwell-E, but it’s not even close.

PCWorld

Rise of the Tomb Raider almost mirrors our Tomb Raider results.

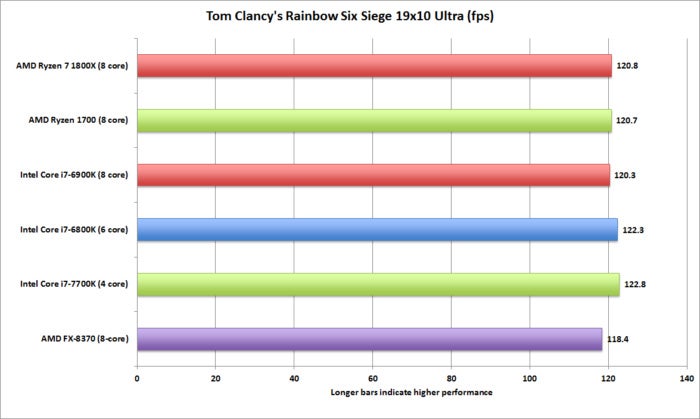

Tom Clancy’s Rainbow Six Siege performance

Moving away from Lara Croft, I ran Tom Clancy’s Rainbow Six Siege at 1920×1080 and Medium. We’re pushing into silly frame-rate territory again, but as I said previously, this would normally stress the CPU. It’s how most reviewers would attempt to measure the theoretical gaming performance of a CPU.

PCWorld

Another set of odd results.

Read on for AMD’s take on our results.

So what the hell is going on?

Presented with the most confusing CPU results I’ve seen in 15 years, I asked AMD what could possibly be the issue. I was given an updated BIOS, which had no impact. I was asked if I had a clean install of Windows, which I did. What about turning off the SMT? Umm, and why give up the performance? Was it my motherboard? The result of my using lower JEDEC 2133 RAM speeds vs. 2933? Not exactly.

I did test the Core i7-7700K at DDR4/2933 speeds alongside the Ryzen 7 1800X. While the 1800X jumped from 82 fps to 136 fps, the Kaby Lake chip went from 87 fps to 181 fps. I frankly have no idea why my gaming performance on Ryzen isn’t where I’d expect it to be. My gut says it’s some kind of plumbing issue with PCIe or somewhere outside the cores themselves.
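To put those DDR4/2933 numbers in proportion, here is a quick percentage-gain calculation in Python using only the frame rates quoted above (the helper name is illustrative). It shows the Kaby Lake chip gaining far more from the faster memory than the Ryzen 7 1800X did.

```python
def percent_gain(before: float, after: float) -> float:
    """Return the percentage increase going from `before` to `after`."""
    return (after - before) / before * 100


# Frame rates quoted above: (DDR4/2133 result, DDR4/2933 result).
results = {
    "Ryzen 7 1800X": (82, 136),
    "Core i7-7700K": (87, 181),
}

for chip, (slow, fast) in results.items():
    print(f"{chip}: +{percent_gain(slow, fast):.0f}%")
# Ryzen 7 1800X: +66%
# Core i7-7700K: +108%
```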

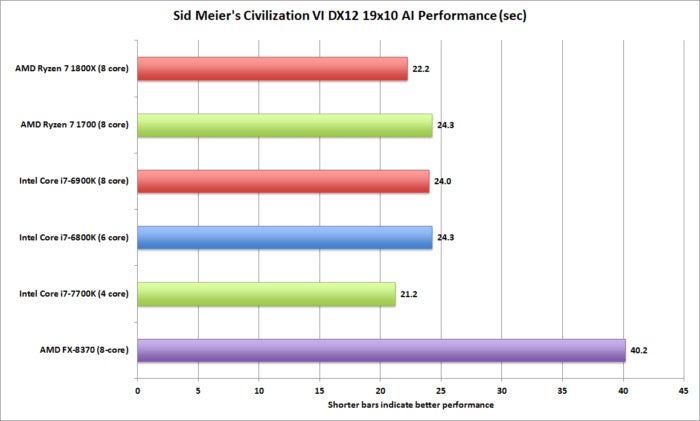

I also ran Sid Meier’s Civilization VI’s AI test. It measures how long it takes to calculate between moves. The result was pretty much a tie (except for FX, of course). I still don’t know what’s going on.

(For the record, Brad Chacos experienced similar baffling results in his own tests of the Ryzen 7 1700.)

PCWorld

The Civ VI test measures how long it takes between moves on each platform. The result was a tie.

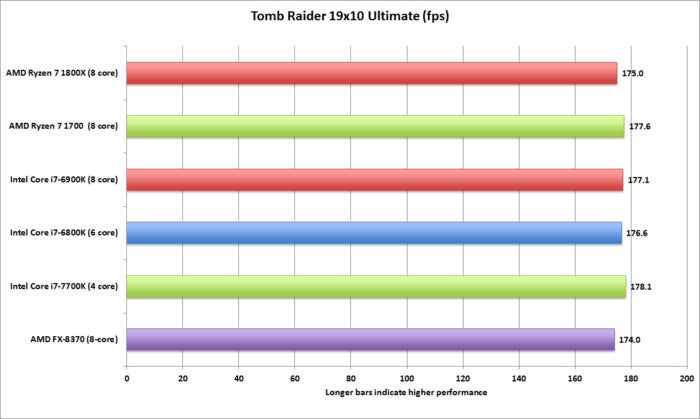

At ‘realistic’ settings it doesn’t matter

But here’s why the anomalies may not bother many (although they probably should): At actual practical resolutions and game settings, it doesn’t seem to matter.

Most of AMD’s public presentations were at 4K resolution using two cards or a mighty Titan X Pascal card. In those scenarios, it was pretty much a tie. You don’t, after all, buy a $500 CPU and $500 GPU to run at 1920×1080 at “normal” settings. Those settings would be great for integrated graphics, but a GeForce GTX 1080? No. And here’s proof: In Tomb Raider, for example, once you move up to the Ultimate preset, they’re all even.

PCWorld

Tomb Raider using the Ultimate setting puts everyone on the same page.

Once you move Rise of the Tomb Raider up to 2560×1600 resolution, Ryzen is right there with Core i7.

PCWorld

At 2560×1600, Rise of the Tomb Raider sees the gap close up.

And yes, here’s Tom Clancy’s Rainbow Six Siege at 1920×1080 resolution using the Ultra setting. You can again see that everything’s okay, right?

PCWorld

Sure, you’re looking at the charts above and fist-bumping AMD fans, but the odd performance at lower game settings should still disturb you. Based on the charts above, for example, you’d think it would be fine to buy an AMD FX-8370 chip.

Very late in the review process, AMD’s John Taylor reached out to PCWorld with a comment on the odd performance we were seeing.

Gordon Mah Ung

Ryzen kicks much butt at multithreaded tasks, but gaming performance is puzzling.

Here’s why, AMD says

“As we presented at Ryzen Tech Day, we are supporting 300+ developer kits with game development studios to optimize current and future game releases for the all-new Ryzen CPU. We are on track for 1,000+ developer systems in 2017. For example, Bethesda at GDC yesterday announced its strategic relationship with AMD to optimize for Ryzen CPUs, primarily through Vulkan low-level API optimizations, for a new generation of games, DLC and VR experiences,” Taylor said. “Oxide Games also provided a public statement today on the significant performance uplift observed when optimizing for the 8-core, 16-thread Ryzen 7 CPU design—optimizations not yet reflected in Ashes of the Singularity benchmarking. Creative Assembly, developers of the Total War series, made a similar statement today related to upcoming Ryzen optimizations.

“CPU benchmarking deficits to the competition in certain games at 1080p resolution can be attributed to the development and optimization of the game uniquely to Intel platforms—until now. Even without optimizations in place, Ryzen delivers high, smooth frame rates on all ‘CPU-bound’ games, as well as overall smooth frame rates and great experiences in GPU-bound gaming and VR. With developers taking advantage of Ryzen architecture and the extra cores and threads, we expect benchmarks to only get better, and enable Ryzen to excel at next-generation gaming experiences as well. Game performance will be optimized for Ryzen and continue to improve from at-launch frame rate scores.”

To boil it down, game developers basically develop for two platforms: Intel’s small socket or Intel’s large socket. AMD, as much as it pains the faithful, has been invisible outside the budget realm, and the results are showing up in the tests. Whether that’s what’s really going on I can’t say for sure, and I doubt anyone can at the moment, but it’s at least plausible.

Conclusion

In the end, AMD’s Ryzen is arguably the most disruptive CPU we’ve seen in a long time for those who need more cores. The CPU basically sells itself when you consider that for the same price as an Intel 8-core Core i7-6900K, you can have an 8-core Ryzen 7 1800X and a GeForce GTX 1080. Hell, you can go a step further and give up a little performance with the Ryzen 7 1700 but step up to a GeForce GTX 1080 Ti—for the same price as that Intel chip. Damn.

But that’s the world Intel has wrought by keeping 8-core CPUs at what many would say are artificially high prices for so long.

Ryzen, however, isn’t a knockout. The gaming disparities at 1080p are sure to spook some buyers. In fact, if you read our piece pitting a Ryzen 7 1700 build against a 5-year-old Core i5 Intel box, you’ll likely be filled with fear, uncertainty, and doubt. Is this really just a game optimization problem, as AMD says, or is it some deeper flaw that can’t be corrected?

Still, let’s give AMD credit for what it has pulled off today in essentially democratizing CPU core counts.

Gordon Mah Ung

Do we need to say anything other than that you can buy a Ryzen with a GeForce GTX 1080 Ti for almost the same price as a single 8-core Intel CPU?